PhD student at Peking University.

PhD student at Peking University.I'm a Ph.D. student at the Institute for Artificial Intelligence, Peking University (PKU), advised by Prof. Yitao Liang. I also collaborate closely with Dr. Xiaojian Ma and Prof. Anji Liu. Before joining PKU, I earned my MSc and BA degrees in Control Science and Technology from Beijing Institute of Technology.

My research centers on building open-ended generalist agents, including computer-use agents, embodied game agents, and deep research agents. My core interest lies in building and leveraging large pre-trained Foundation Models (LLMs, VLMs, VLA) to significantly enhance agent generalization capabilities. My research contributions fall into two main categories:

- Agentic Workflow: DEPS (Planning Agent), JARVIS-1 (Self-improving Agent with Multimodal Memory), RAT (Open Deep Research Agent), ROCKET-1 (Embodied Agent with GUI action space), ProAgent (Collaborating LLM-based Game Agents).

- Agentic Foundation Models: UI-TARS-1.5 (An open-source multimodal GUI and Game agent built upon a powerful vision-language model), OmniJARVIS (Hierarchical VLA with latent action space), JARVIS-VLA (A vision-language-action model in open worlds).

Warning

Problem: The current name of your GitHub Pages repository ("Solution: Please consider renaming the repository to "

http://".

However, if the current repository name is intended, you can ignore this message by removing "{% include widgets/debug_repo_name.html %}" in index.html.

Action required

Problem: The current root path of this site is "baseurl ("_config.yml.

Solution: Please set the

baseurl in _config.yml to "Education

-

Peking UniversityInstitute for Artificial Intelligence

Peking UniversityInstitute for Artificial Intelligence

Ph.D. CandidateSep. 2022 - present -

Beijing Institute of TechnologySchool of Automation

Beijing Institute of TechnologySchool of Automation

M.S. in Control Science and TechnologySep. 2019 - Jul. 2022 -

Beijing Institute of TechnologySchool of Automation

Beijing Institute of TechnologySchool of Automation

B.Eng. in AutomationSep. 2015 - Jul. 2019

Experience

-

ReviewerICML, NeurIPS, ICLR, CVPR, ECCV, AAAI.2021 - present

-

Research InternAlibaba Inc.May. 2021 - Aug. 2021

Honors & Awards

-

Best Paper Award, ICML 2023 TEACH Workshop2023

-

Chinese National Scholarship2021

-

Outstanding Graduate of Beijing2019

-

Autonomy Prize of Indoor Event on 10th International Micro Air Vehicle Competition and Conference, Melbourne.2018

-

Meritorious Winner on American Mathematical Contest In Modeling (MCM)2018

News

Selected Publications (view all )

JARVIS-VLA: Post-Training Large-Scale Vision Language Models to Play Visual Games with Keyboards and Mouse

Muyao Li*, Zihao Wang*, Kaichen He, Xiaojian Ma, Yitao Liang (* equal contribution)

ACL Findings 2025

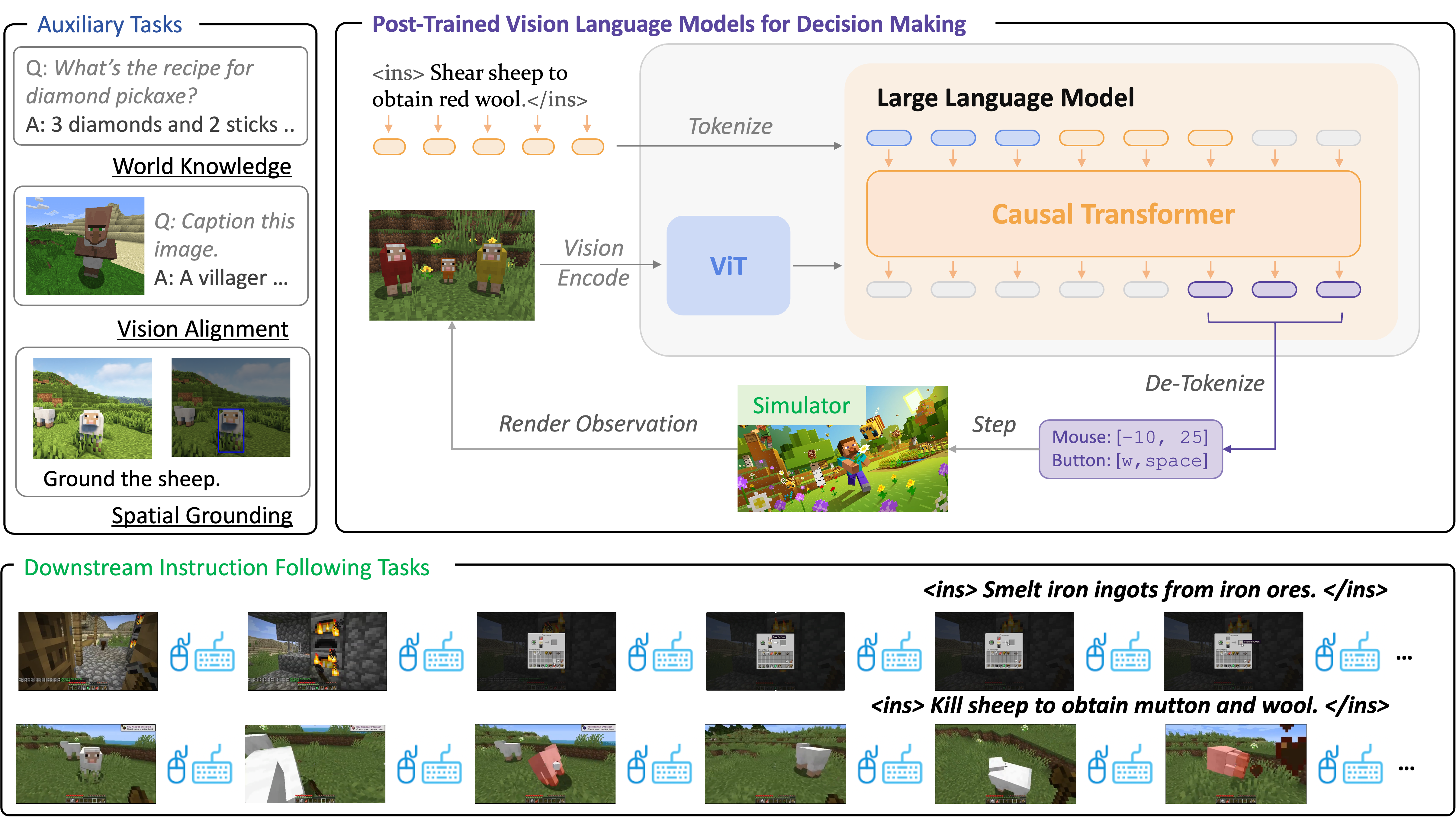

Visual Language Action (VLA) models, pretrained on large-scale web datasets, have shown promise in decision-making tasks. However, previous work has primarily focused on action post-training, often neglecting enhancements to the foundational model itself. In response, we introduce a novel approach, Act from Visual Language Post-Training, which refines Visual Language Models (VLMs) through visual and linguistic guidance in a self-supervised manner. This enhancement improves the models' capabilities in world knowledge, visual recognition, and spatial grounding in open-world environments. Following the above post-training paradigms, we obtain the first VLA models in Minecraft that can follow human instructions on over 1k different atomic tasks, including crafting, smelting, cooking, mining, and killing.

JARVIS-VLA: Post-Training Large-Scale Vision Language Models to Play Visual Games with Keyboards and Mouse

Muyao Li*, Zihao Wang*, Kaichen He, Xiaojian Ma, Yitao Liang (* equal contribution)

ACL Findings 2025

Visual Language Action (VLA) models, pretrained on large-scale web datasets, have shown promise in decision-making tasks. However, previous work has primarily focused on action post-training, often neglecting enhancements to the foundational model itself. In response, we introduce a novel approach, Act from Visual Language Post-Training, which refines Visual Language Models (VLMs) through visual and linguistic guidance in a self-supervised manner. This enhancement improves the models' capabilities in world knowledge, visual recognition, and spatial grounding in open-world environments. Following the above post-training paradigms, we obtain the first VLA models in Minecraft that can follow human instructions on over 1k different atomic tasks, including crafting, smelting, cooking, mining, and killing.

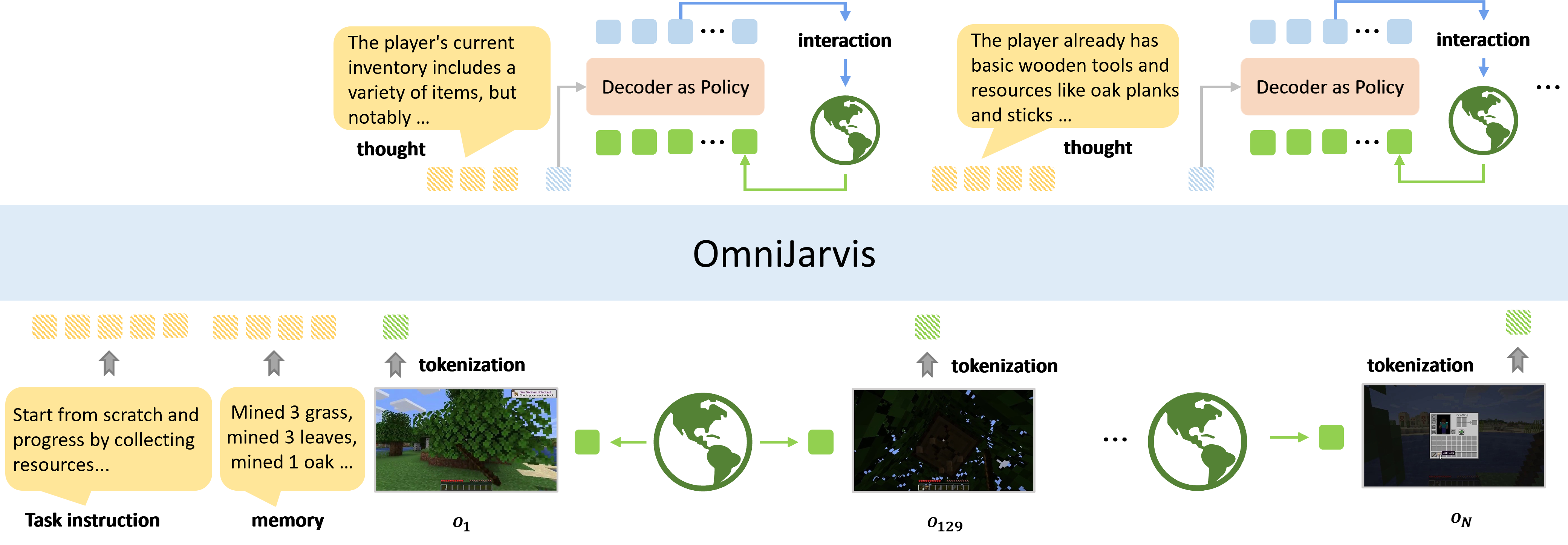

OmniJARVIS: Unified Vision-Language-Action Tokenization Enables Open-World Instruction Following Agents

Zihao Wang, Shaofei Cai, Zhancun Mu, Haowei Lin, Ceyao Zhang, Xuejie Liu, Qing Li, Anji Liu, Xiaojian Ma, Yitao Liang

NeurIPS 2024

An end-to-end open-ended agent based on Vision-Language-Action (VLA) models with self-supervised behavior tokenizer, that can answer questions and follow instructions in open-world Minecraft.

OmniJARVIS: Unified Vision-Language-Action Tokenization Enables Open-World Instruction Following Agents

Zihao Wang, Shaofei Cai, Zhancun Mu, Haowei Lin, Ceyao Zhang, Xuejie Liu, Qing Li, Anji Liu, Xiaojian Ma, Yitao Liang

NeurIPS 2024

An end-to-end open-ended agent based on Vision-Language-Action (VLA) models with self-supervised behavior tokenizer, that can answer questions and follow instructions in open-world Minecraft.

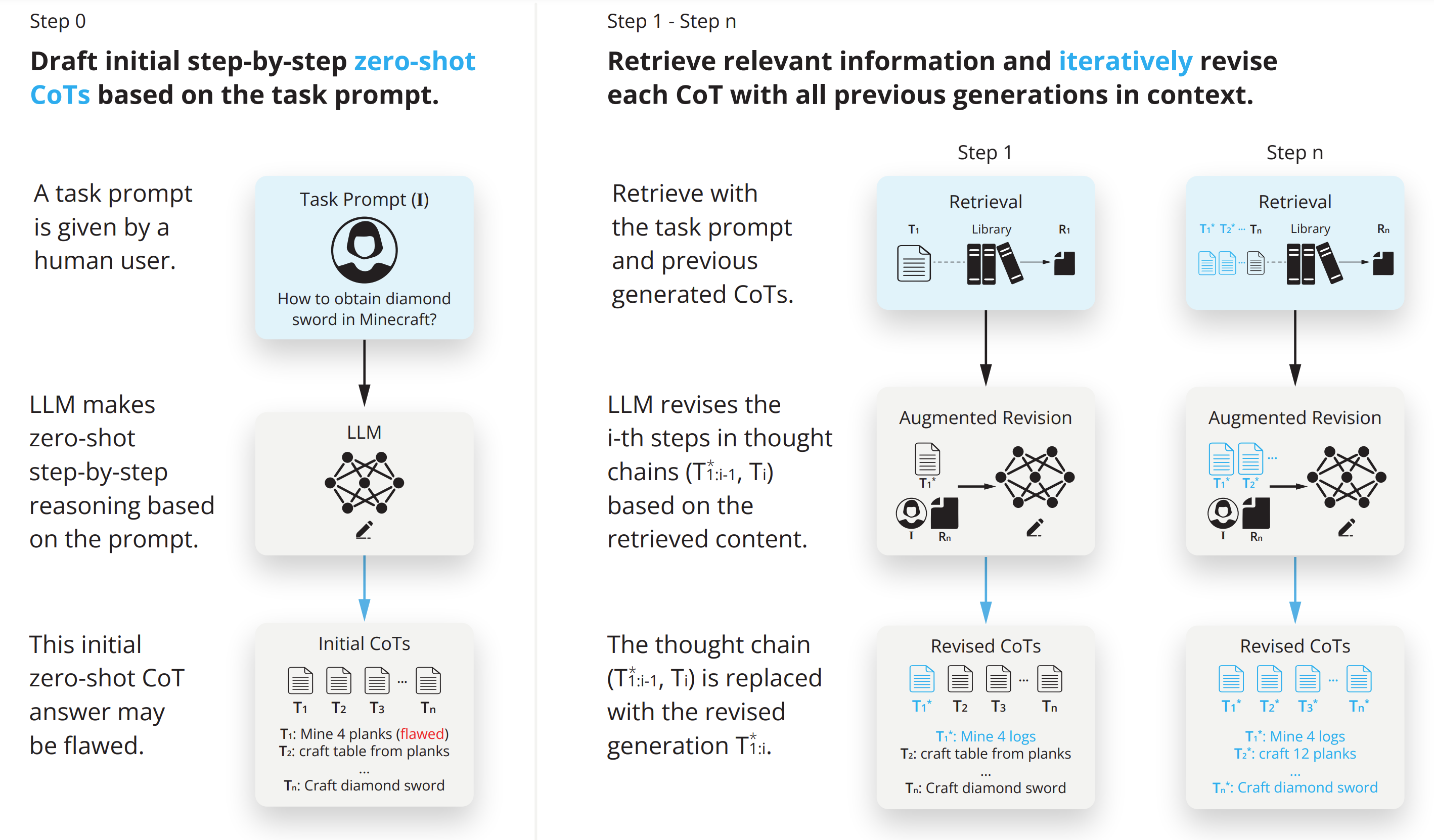

RAT: Retrieval Augmented Thoughts Elicit Context-Aware Reasoning in Long-Horizon Generation

Zihao Wang, Anji Liu, Haowei Lin, Jiaqi Li, Xiaojian Ma, Yitao Liang

NeurIPS Workshop 2024

An agent with retrieval-augmented thought that can conduct code generation, math reasoning, embodied planning and open-ended question answering.

RAT: Retrieval Augmented Thoughts Elicit Context-Aware Reasoning in Long-Horizon Generation

Zihao Wang, Anji Liu, Haowei Lin, Jiaqi Li, Xiaojian Ma, Yitao Liang

NeurIPS Workshop 2024

An agent with retrieval-augmented thought that can conduct code generation, math reasoning, embodied planning and open-ended question answering.

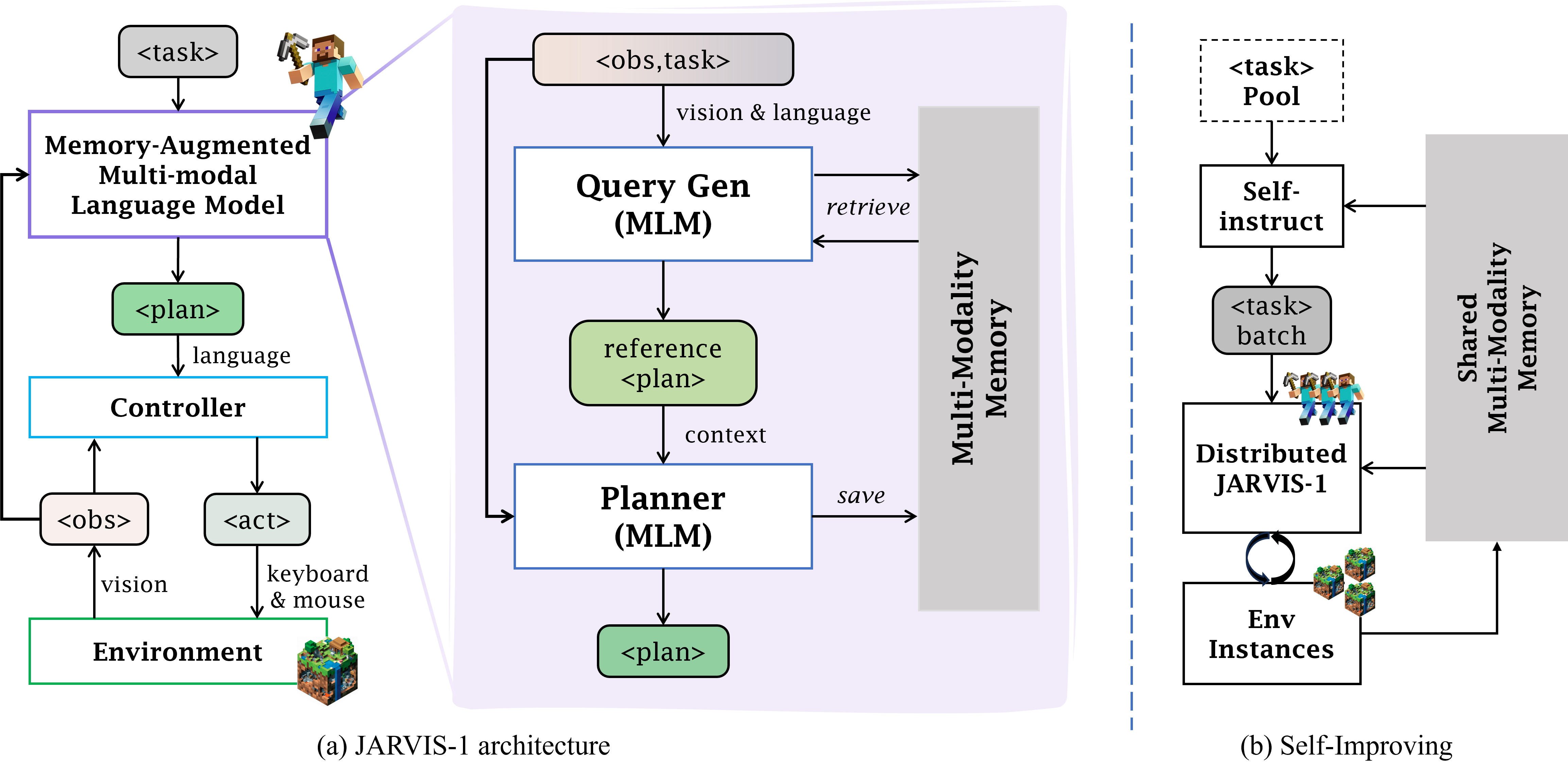

JARVIS-1: Open-World Multi-task Agents with Memory-Augmented Multimodal Language Models

Zihao Wang, Shaofei Cai, Anji Liu, Yonggang Jin, Jinbing Hou, Bowei Zhang, Haowei Lin, Zhaofeng He, Zilong Zheng, Yaodong Yang, Xiaojian Ma, Yitao Liang

T-PAMI 2024

A multi-task agent that can self-improve in open-ended Minecraft and accomplish up to 200+ tasks.

JARVIS-1: Open-World Multi-task Agents with Memory-Augmented Multimodal Language Models

Zihao Wang, Shaofei Cai, Anji Liu, Yonggang Jin, Jinbing Hou, Bowei Zhang, Haowei Lin, Zhaofeng He, Zilong Zheng, Yaodong Yang, Xiaojian Ma, Yitao Liang

T-PAMI 2024

A multi-task agent that can self-improve in open-ended Minecraft and accomplish up to 200+ tasks.

Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents

Zihao Wang, Shaofei Cai, Guanzhou Chen, Anji Liu, Xiaojian Ma, Yitao Liang

NeurIPS 2023 Best Paper Award, ICML 2023 TEACH Workshop

We investigate the challenge of task planning for multi-task embodied agents in open-world environments. We propose"Describe, Explain, Plan and Select"(DEPS), an interactive planning approach based on Large Language Models. DEPS facilitates better error correction on initial LLM-generated plan by integrating description of the plan execution process and providing self-explanation of feedback when encountering failures during the extended planning phases. Furthermore, it includes a goal selector, which is a trainable module that ranks parallel candidate sub-goals based on the estimated steps of completion, consequently refining the initial plan. Our experiments mark the milestone of the first zero-shot multi-task agent that can robustly accomplish 70+ Minecraft tasks and nearly double the overall performances.

Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents

Zihao Wang, Shaofei Cai, Guanzhou Chen, Anji Liu, Xiaojian Ma, Yitao Liang

NeurIPS 2023 Best Paper Award, ICML 2023 TEACH Workshop

We investigate the challenge of task planning for multi-task embodied agents in open-world environments. We propose"Describe, Explain, Plan and Select"(DEPS), an interactive planning approach based on Large Language Models. DEPS facilitates better error correction on initial LLM-generated plan by integrating description of the plan execution process and providing self-explanation of feedback when encountering failures during the extended planning phases. Furthermore, it includes a goal selector, which is a trainable module that ranks parallel candidate sub-goals based on the estimated steps of completion, consequently refining the initial plan. Our experiments mark the milestone of the first zero-shot multi-task agent that can robustly accomplish 70+ Minecraft tasks and nearly double the overall performances.